Nvidia: Paving The Way For Rapid Growth Through AI Democratization

Please subscribe for future content or follow me on Twitter (@moneyflowinvest) if you find this article valuable!

Article Date - 06/21/2022

Price per share (Open) $158.80

The Age of Artificial Intelligence is upon us. Nvidia (NVDA) is leading the revolution through its build-out of a comprehensive accelerated compute platform, laying the foundation for democratizing AI for thousands of businesses. This is a substantial opportunity as its AI enterprise solution has the potential to support trillions of dollars of end markets and generate billions in high-margin revenue for shareholders. Despite only launching the licensed software product last year, the company is already gaining momentum with large corporations signing up to its ecosystem across a diverse set of industries. Every license that it sells will spin the flywheel faster and bolster its durable economic moat. In my view, the market is not adequately accounting for this future growth.

Yeah, but why Nvidia?

You may believe that AI will proliferate across industries and radically change the world. It still raises the question: why is Nvidia the business that will emerge as a prime beneficiary? You may ask, “Yeah, I understand AI and accelerated computing are promising, but isn’t the market flooded with a sea of competitors vying to capture profits? How can you possibly select the winner?” This is a great question.

In a memo written immediately before the dot-com crash, Howard Marks, the founder of Oaktree Capital, addressed the problem of picking winners in an industry whose technology promises to transform society.

Of course, the entire furor over technology, e-commerce and telecom stocks stems from the companies' potential to change the world. I have absolutely no doubt that these movements are revolutionizing life as we know it, or that they will leave the world almost unrecognizable from what it was only a few years ago. The challenge lies in figuring out who the winners will be, and what a piece of them is really worth today.

Howard Marks, Bubble.com

Nvidia will generate significantly more cash flow in the future not merely because it is in a quickly growing industry but because it is hyper-focused on fortifying a powerful economic moat with tremendous economies of scale. For instance, it is the only supplier that can ship a turnkey data center system with a fully unified architecture. The breadth and depth of its solution are unparalleled in the market. The physical components are fully programmable with Nvidia’s proprietary software layer. This layer enables engineers to tap into the incredible horsepower of the accelerated hardware. This architecture design incentivizes its customers to take advantage of its capabilities by tightly integrating the platform with their systems and applications, thus significantly raising switching costs. Management is not shy about this strategic point of differentiation. For instance, Piper Sandler asked Nvidia’s VP of Enterprise Computing if anyone else in the space is close to delivering a complete package that is competitive with Nvidia’s offering.

But I would say we do not think so, right? But I will elaborate on that, right? If you think about the picture shown in my slide, the point we made was this is really a full stack problem from the piece parts of the hardware to the systems, to the low level of software, to the frameworks on top. We're the only company in the planet that has been working on all of these limits. We believe we are really the only company in the planet, Harsh, that has focused on the entire stack, right? And that's why we need to really optimize it and tailor it for these businesses.

Manuvir Das, Piper Sandler 2021 Virtual Global Technology Conference

This full-stack solution includes GPUs, CPUs, DPUs, SOCs, lightning-fast networking interconnect equipment, SDKs, libraries, virtual worlds simulations, AI models, software services, and more. These components have enabled Nvidia to deliver exceptional performance and consistently destroy the competition in MLPerf AI Benchmarks.

Furthermore, the company has an exceptional founder and CEO at its helm who has the vision and relentless drive to succeed and push the boundaries.

Please check out my write-up below for a thorough deep dive into Nvidia’s moat.

The Proof is in the Numbers

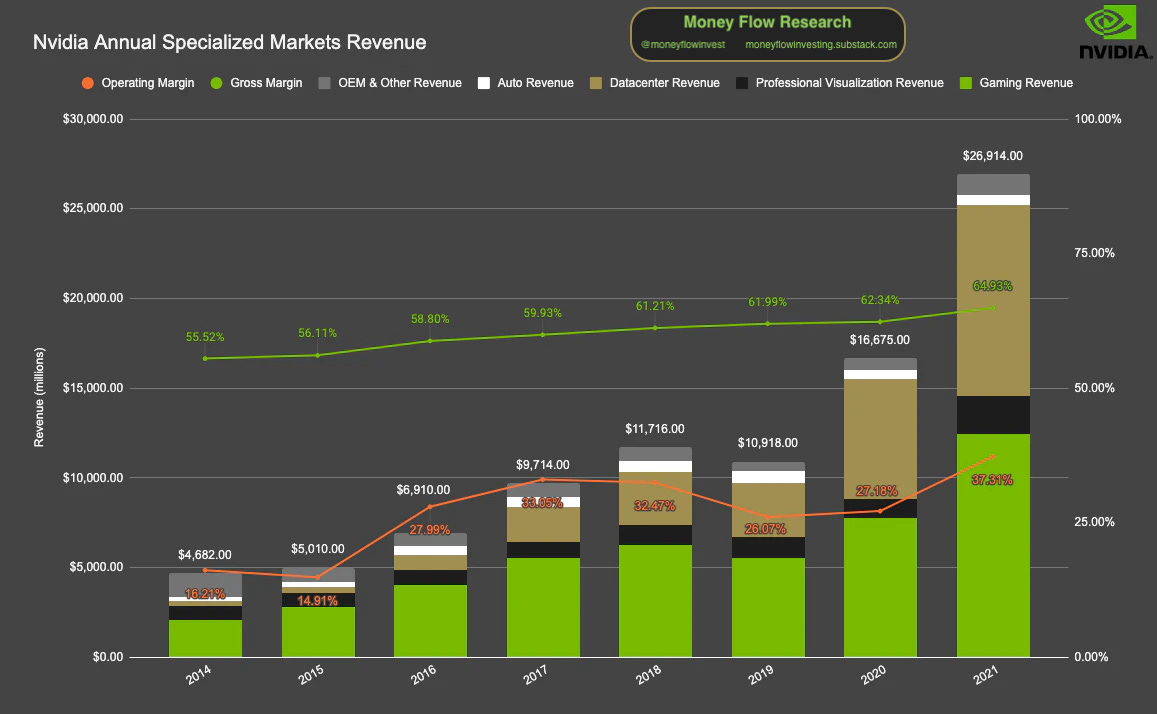

Nvidia’s operating results demonstrate the expanding economies of scale. By far, the most significant contribution of profits has come from the Datacenter and Gaming segments, which are a combined ~86% of total revenues.

Gaming is the company’s bread and butter. It boasts a high degree of market penetration: a 78% market share in PC discrete GPUs, over 200 million gamers using GeForce, a 76% share among gamers in the May 2022 Steam survey, and 15 million+ GeForce NOW subscribers. This strong market leadership, combined with favorable overall industry growth, has produced a healthy gaming revenue CAGR of 25%. Despite a tough macro environment and a decrease in crypto mining revenue, the business should continue to have multiple powerful tailwinds at its back: the adoption of its RTX GPUs for their state-of-the-art ray-tracing capabilities, the rise of eSports, further market penetration of its GeForce NOW streaming service, and the growing popularity of spectator gaming through platforms like Twitch.

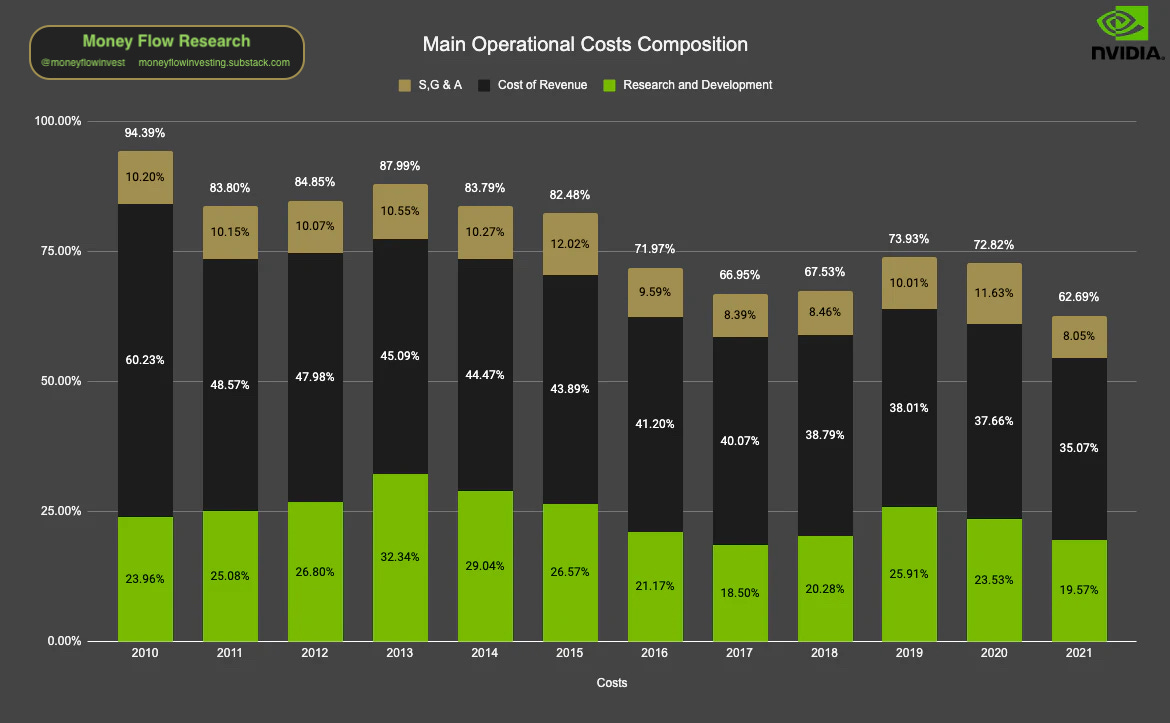

The other significant contributor to gross margins is the data center. As shown in the graphic below, its data center products have a higher gross margin profile than the rest of the business units. Thus, the seven-year 65% Data Center CAGR vs. 28% overall is mainly responsible for the expansion in operating and gross margins.

Much of the gain in operating margin has come from COGS reduction as a % of revenue. The gross margin should expand further as the sales mix continues to shift toward the data center and SaaS revenue. There is still room to gain further operating leverage in the mid to long term for both R&D and SG&A, which represent a combined 17.5% of revenue. I do not anticipate research costs showing much operating leverage within the next few years as the business invests heavily in its IP. I expect SG&A costs, on the other hand, to trend downward by roughly 1-2% over the next five years.

Steady Progress Toward the Proliferation of Nvidia AI

Within the data center segment, hyperscalers (think cloud companies like AWS) and vertical enterprises continue to snap up Nvidia’s products. For instance, Meta announced in early 2022 that it is building out “the world’s fastest AI supercomputer” with sixteen thousand A100 Tensor Core GPUs later this year. Similarly, Tesla unveiled in 2021 that it was using nearly six thousand A100s to train deep neural networks for its Autopilot system and self-driving capabilities. Additionally, all major cloud providers offer Nvidia A100 GPU-accelerated instances. The success with its hyperscaler partners is impressive, considering many of them design their own proprietary data center chips.

A considerable tailwind for the fast adoption of Nvidia AI is the rapidly increasing complexity of training and inference workloads. Major revolutionary breakthroughs within the past three years have raised the bar for computational demands. These discoveries include natural language processing and Deep Learning Recommender System (DLRM). Underpinning both of these models is the concept of a transformer. Transformers enable neural networks to learn contextually without requiring an extensive human-labeled data set, which is highly costly and time-consuming to procure. According to the company, these two areas are driving enormous investments in cloud service providers to train these sophisticated models without sacrificing speed. Furthermore, the firm announced the turbocharged H100 data center GPU at the 2022 GTC. Designed with its next-gen Hopper architecture, the H100 was architected with an advanced transformer engine to support these cutting-edge models.

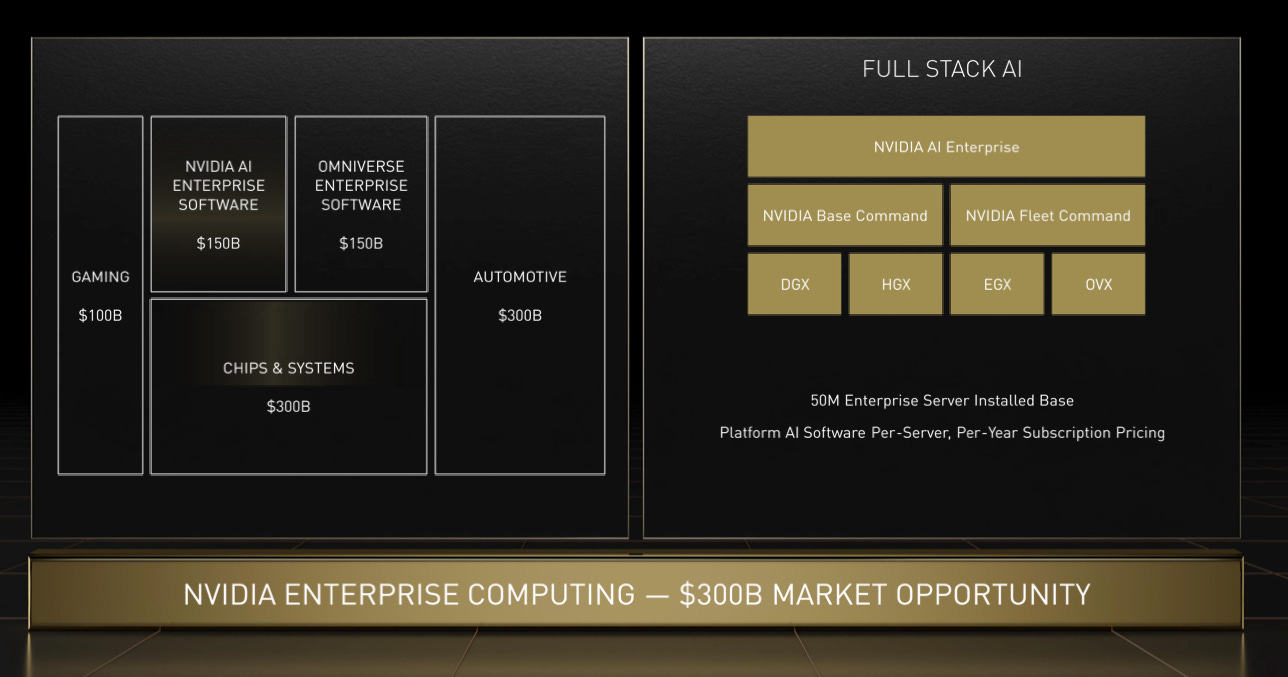

One of management’s big, audacious goals is to democratize AI by making it more accessible to all businesses regardless of their technical core competencies. In this envisioned future, corporations of varying sizes will purchase licenses for AI capabilities from Nvidia, similar to how companies today buy mission-critical subscriptions to support business operations from firms such as Salesforce, Microsoft, or Adobe. To accomplish this vision and capture the estimated $150 billion market for AI Enterprise software, CEO Jensen Huang believes Nvidia must bundle the software and hardware components into a complete, ready-to-use package for its customers. This is because many enterprises do not have the technical ability or resources to build proprietary AI solutions. Additionally, developing a homegrown system would require an enormous effort to stitch together the complex algorithms, system software, networking, and storage components. With the launch of its new data center AI Enterprise software services (and Omniverse Enterprise software described below), the company is one step closer to making this a reality. For instance, its newly minted Base Command and Fleet Command IT solutions orchestrate the development and deployment of AI software at scale, both in the data center and at the edge. These services will work on top of VMware vSphere, the de facto data center platform. The business is addressing a critical IT infrastructure gap, which will ease technical burdens and drastically lower AI adoption barriers. Nvidia’s Manuvir Das elaborated on this central pain point at the 2021 Piper Sandler Conference.

The fundamental reason is that there are two very different sets of people within every enterprise company. On the one hand, you have the data scientists. These are the people who understand AI, who understand the tools, Jupyter notebooks, all these kinds of things. They knew the development of new AI capabilities, and they move fast and they are pretty agile, and they are the state-of-the-art cutting-edge, doing new things every night.

On the other hand, you’ve got IT administrators, who are accountable and responsible for making sure that the actual applications running in the enterprise data center are safe, secure, stable because the business of the company depends on it, right, and the experience of the customer depends on it. And these two personas, these two worlds are pretty much apart because the one world of the data scientist wants to use the tools and frameworks that they are comfortable with, whereas the IT administrator is used to a different model for how to deploy applications. And there is a disconnect because IT does not know how to pick up what the data scientists produced and the data scientists don't know how to operate in a world where IT lives.

The Professional Visualization and Auto segments are still subscale and represent only ~10% of sales. I believe these business units have tremendous growth ahead of them. Management is pouring substantial resources into both segments with its Omniverse Enterprise AI and DRIVE Hyperion platforms.

On the Professional Visualization end, Nvidia is starting to ramp up its Omniverse enterprise software, which has serious potential to generate enormous incremental high-margin SaaS revenues with an estimated $150 billion market opportunity. These days, investors are understandably skeptical of the Metaverse hype. $META, for example, is hinging much of its future success on producing the metaverse. It is allocating significant resources toward that end. In 2022, investors are not inclined to pay for this upside (and are potentially assigning a negative valuation to the venture), given that the entire business trades at a mere ~9.5x EV-to-EBIT despite a high-quality core operation gushing free cash flow.

Nvidia has a vastly different strategic position toward virtual or augmented worlds, one that is quickly gaining commercial traction. Management is focused on two primary opportunities in this space. The first is Omniverse for Designers, which enables creators worldwide to collaborate within 3D virtual spaces to design and build products. The second is digital twins for companies to unlock operational efficiencies and maximize productivity for client-facing and production-related processes. For example, Amazon uses digital twins to optimize warehouse design and flow and to train intelligent robots. Kroger is creating digital twins of its supermarkets to simulate and iterate over various floor plan layouts before it allocates resources to make modifications across its physical locations. According to Nvidia's VP of Omniverse, BMW is saving up to 30% on its costs with the use of a factory digital twin. Nvidia is selling its Omniverse software licenses at $9k per year for a workgroup of 2 Creators, 10 Reviewers, and 4 Omniverse Nucleus (the collaboration engine which manages asset interchange and version control) subscriptions. Management believes that this new business will spur a virtuous cycle. The idea is that license purchases will lead to more hardware sales. In turn, the increased hardware sales will boost production capacity and influence customers to purchase even more licenses. Nvidia already sees early success. For example, after announcing the product in Q2 2021, Nvidia has already signed up 10% of the world’s top 100 companies, with ~200k software downloads and nearly 300 companies evaluating it.

In the auto market, the company has cultivated an $11 billion pipeline and counting through 2027. Nvidia has partnerships with a handful of marquee names such as Mercedes, Jaguar, Land Rover, Volvo, Polestar, Amazon’s Zoox, DiDi, BYD, NIO, and more. Three of these deals have recurring revenue characteristics in which the company splits the fees from over-the-air updates to the vehicles. This revenue stream will start flowing through in 2024 and 2025 for Mercedes and Jaguar/Land Rover, respectively.

Main Risks to Thesis

Please see my Nvidia Investment Report for a more fleshed-out take on #1 and #2, plus some rebuttals.

1. Fast Pace of Technological Innovation Weakens Competitive Position

Generalized AI and accelerated computing solutions lose out to specialized accelerators such as ASICs, TPUs, or FPGAs.

The major cloud companies become entirely self-sufficient and vertically integrated, producing virtually all of their own chips.

A start-up arrives on the scene and disrupts the industry with superior AI hardware or business software.

2. Commoditization Risk

As the market for accelerated chips, components, and services moves toward saturation and maturity, there is a risk of commoditization, which would put immense pressure on margins. The semiconductor industry is rife with examples of product commoditization over time.

3. World War 3

Nvidia is a fabless semiconductor company that outsources its production to TSMC and Samsung. The manufacturing of its products is heavily concentrated in countries such as Taiwan and China. If a worst-case scenario such as a Taiwan invasion were to play out or the scope of the Ukraine/Russia conflict were to expand to include more countries, all hell could break loose on supply chains and demand.

The Valuation of a Generational Compounder

In my view, the company has years of 25%+ compounded growth ahead of it. It is challenging for businesses to maintain such a growth rate after enjoying a 28% seven-year CAGR, but Nvidia is no ordinary business. There are three main drivers for this optimistic outlook.

All four of its markets have rapidly expanding TAMs. Management believes the combined opportunity amounts to $1 trillion. This number is hard to quantify since Nvidia is entering newly formed markets that it has paved for itself. Even if the company captured only 20% of this market in 8 years, its revenue would grow by more than 25% per year.

Between Enterprise AI, Omniverse, GeForce NOW, and other forthcoming software services, high-margin recurring revenue will become a sizable portion of its total sales over the next 5-10 years. For enterprise software, the adoption rate will pick up momentum as companies realize the significant cost savings they can unlock through virtual simulation and leveraging AI. For GeForce NOW, gamers are drawn toward a low monthly payment model that gives them access to the latest and greatest GeForce GPUs.

Its economic moat and competitive lead are growing noticeably wider. Management’s continued execution of constructing a sustainable competitive advantage will enable it to maintain or increase its market share and ROTC.

Bonus Reason: This thing is founder-led. Jensen Huang is an exceedingly skilled CEO on a mission to see this evolve into one of the largest companies in the world. This rare combination of passion and talent is an intangible asset not captured on the balance sheet. He also has plenty of skin in the game.
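The growth math behind the first driver can be sanity-checked with a few lines of Python. This is only a rough sketch: the $1 trillion TAM, 20% capture, and 8-year horizon come from the text above, while the starting revenue of about $26.9 billion (Nvidia's fiscal 2022 total) is my own assumption for the base year.

```python
def required_cagr(start_revenue: float, target_revenue: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to target in `years`."""
    return (target_revenue / start_revenue) ** (1 / years) - 1


TAM = 1_000e9          # management's $1 trillion estimate (from the article)
CAPTURE = 0.20         # share of the TAM captured (from the article)
YEARS = 8              # time horizon (from the article)
BASE_REVENUE = 26.9e9  # ASSUMPTION: fiscal 2022 revenue of roughly $26.9B

target = TAM * CAPTURE
cagr = required_cagr(BASE_REVENUE, target, YEARS)
print(f"Target revenue: ${target / 1e9:.0f}B, implied CAGR: {cagr:.1%}")
```

Under these assumptions the implied growth rate lands in the high 20s, which is consistent with the "> 25% per year" claim. A different base year or TAM estimate would move the result.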

Management's incentive structure aligns it with shareholders. I would have also included ROCE or ROE, but the combination of revenue growth, adjusted operating income growth, and TSR (total shareholder return) will suffice. I don’t love that the company excludes share-based compensation from the adjusted operating income metric. However, I will cut the board of directors a little slack since this unfortunate practice has become an industry-wide standard and the management team has produced stellar results against these criteria over the past decade.

Ultimately, my high conviction bet is that there is a long runway ahead. Without this confidence, the stock looks optically overvalued at a 1.66% owner’s earnings yield. In my opinion, it is one of the most compelling business growth stories over the next decade.

MCX = Maintenance Capital Expenditures

The Buy-In Strategy

At $158.80 per share, the stock is slightly above my MOS target price. You can manufacture this price in two main ways.

For each lot of 100 NVDA shares, sell a mid to long-dated PUT whose effective price (exercise price - premium) <= $116. This action would bring your cost basis to ~$137. Note: The intention of selling the put option is to purchase shares and not necessarily profit off the premium.

For example, you can sell a June 16th, 2023 (360 DTE) PUT @ 137.5 for $19.77.

Scenario 1 - You get exercised: You will have 200 NVDA shares at an average cost of roughly $138 per share. Congrats! You are the owner of a wonderful company with many years of compounding ahead.

Scenario 2 - You don’t get exercised: You earned an annualized return of 17.02% on your PUT [($19.77 premium / $117.73 risk capital) * (365 days / 360 DTE)] and lowered your cost basis to $139.

Don’t buy shares immediately. Instead, simply sell puts and generate a premium while you wait for the price to potentially drop further.

For example, you can sell an August 19th, 2022 (59 DTE) PUT @ 145 for $9.28.

Scenario 1 - You get exercised: You will have 100 NVDA shares for $135.72. Congrats! You are the owner of a wonderful company with many years of compounding ahead.

Scenario 2 - You don’t get exercised: You earned an annualized return of 42% on your PUT [($9.28 premium / $135.72 risk capital) * (365 days / 59 DTE)] and lowered your cost basis for when you eventually (hopefully) purchase the shares.
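The arithmetic in both put-selling examples can be reproduced with a small Python sketch. The strikes, premiums, and share price are the ones quoted above; the `ShortPut` class and its property names are hypothetical helpers of my own, and the return is a simple (non-compounded) annualization that ignores commissions, taxes, and early assignment.

```python
from dataclasses import dataclass


@dataclass
class ShortPut:
    strike: float   # exercise price per share
    premium: float  # premium collected per share
    dte: int        # days to expiration

    @property
    def effective_price(self) -> float:
        """Net purchase price per share if the put is assigned."""
        return self.strike - self.premium

    @property
    def annualized_return(self) -> float:
        """Premium over capital at risk, scaled linearly to 365 days."""
        return self.premium / self.effective_price * 365 / self.dte


SHARE_PRICE = 158.80  # opening price quoted in the article

# First way: buy 100 shares now and sell the June 2023 put.
# 360 days is the figure used in the article's return formula.
long_dated = ShortPut(strike=137.5, premium=19.77, dte=360)
blended_if_assigned = (SHARE_PRICE + long_dated.effective_price) / 2
basis_if_not_assigned = SHARE_PRICE - long_dated.premium

# Second way: hold cash and sell the August 2022 put.
short_dated = ShortPut(strike=145.0, premium=9.28, dte=59)

for name, put in [("Jun 2023", long_dated), ("Aug 2022", short_dated)]:
    print(f"{name}: effective ${put.effective_price:.2f}, "
          f"annualized {put.annualized_return:.1%}")
print(f"First way, average basis if assigned: ${blended_if_assigned:.2f}")
print(f"First way, basis if not assigned: ${basis_if_not_assigned:.2f}")
```

For the June 2023 contract this gives an effective price of $117.73 and an annualized return of about 17%; for the August 2022 contract, $135.72 and about 42%. The averaged basis for the first way comes out near $138 for this particular contract, since its effective price sits a little above the $116 threshold.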

More advanced bonus strategy: You can sell out-of-the-money Bull Put Spreads. If the stock drops below your short strike, you can sell to close your long put at a gain, which lowers your cost basis for the shares. This strategy will enable you to decrease the amount of collateral you need for your trade until you are ready to purchase shares.
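To illustrate the collateral point, here is a rough Python comparison of a cash-secured put and a bull put spread. The short leg reuses the August 2022 put from the example above; the long leg (strike $135, premium $5.50) is purely hypothetical and not a real quote. The collateral figure uses the common convention of spread width times 100 shares, though actual broker requirements vary.

```python
CONTRACT_SIZE = 100  # shares per standard equity option contract


def cash_secured_collateral(strike: float) -> float:
    """Cash set aside to buy 100 shares at the strike if assigned."""
    return strike * CONTRACT_SIZE


def bull_put_spread(short_strike: float, short_premium: float,
                    long_strike: float, long_premium: float) -> dict:
    """Per-contract credit, typical collateral, and maximum loss of the spread."""
    width = short_strike - long_strike
    net_credit = short_premium - long_premium
    return {
        "net_credit": net_credit * CONTRACT_SIZE,
        "collateral": width * CONTRACT_SIZE,  # typical requirement; brokers vary
        "max_loss": (width - net_credit) * CONTRACT_SIZE,
    }


# Short leg from the article; long leg is a HYPOTHETICAL strike and premium.
spread = bull_put_spread(short_strike=145.0, short_premium=9.28,
                         long_strike=135.0, long_premium=5.50)
naked = cash_secured_collateral(145.0)

print(f"Cash-secured put collateral: ${naked:,.0f}")
print(f"Spread collateral: ${spread['collateral']:,.0f}, "
      f"net credit: ${spread['net_credit']:,.0f}, "
      f"max loss: ${spread['max_loss']:,.0f}")
```

With these illustrative numbers, the spread ties up $1,000 per contract instead of $14,500, at the cost of a smaller net credit. The trade-off is that the long put must be closed (or the shares funded) before you can actually take assignment.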

Whatever route you choose, I advise waiting to sell puts until the stock drops and implied volatility is high. Waiting patiently for this to occur will present you with a better deal.

What do you think? Comment below if you agree or disagree with my thesis or if you have any other thoughts to share.

Please do your homework. This article is for education and entertainment only and should not be taken as investment advice.
