Nvidia Investment Thesis Report

Overview

Nvidia (NVDA) is an accelerated computing company that intersects the semiconductor and software industries. The CEO, Jensen Huang, founded the company in 1993, and six years later, his team invented the GPU. It has transformed from a GPU and gaming business into a general-purpose computing platform. Its supercharged processors allow high-performance parallel processing through hundreds of advanced embedded cores. Over a decade ago, management realized that its chips were a perfect fit for processing the large amounts of data required in artificial intelligence applications. As a result, it invested billions of dollars into R&D and M&A to expand into other industries and use cases. The business generates substantial cash flow at a high margin since its customers are willing to pay rich premiums to have the best computational performance available for their own needs.

Industry Research

Nvidia is going against the grain in the traditional semiconductor industry by differentiating itself with its full-stack compute strategy. Through its acquisition of Mellanox and aggressive hiring of top software talent, the business is positioning itself as the leading solution for accelerating data processing. A common issue semiconductor companies run into is producing commoditized silicon chips that become undifferentiated. Nvidia’s proprietary full-stack solution avoids this commoditization by adding substantial value for businesses looking to gain an edge over their competitors.

The semiconductor business has regular cycles of under-capacity and over-capacity. Nvidia experienced the latter in 2018 when an oversupply in desktop gaming GPUs caused the company to reduce shipments to manufacturers to allow the channel inventory to attenuate. In 2022, the industry is dealing with a severe widespread chip shortage that constrains silicon wafer production. Nvidia is a fabless semiconductor company, which means that it outsources the actual chip production to its partners instead of manufacturing in-house. Management is currently mitigating the shortage by securing long-term commitments from suppliers as part of its dual-fab strategy. Nvidia capitalizes on multiple secular growth trends that increasingly lessen the impact of these cyclical fluctuations.

Mission

NVIDIA’s mission is intriguing because its products and services push the boundaries of human innovation and ingenuity. This outsized influence has significant real-world implications in many areas, including making manufacturing processes more efficient, reducing operational costs, expediting scientific discoveries, discovering new drugs, developing smarter cities, and much more. With a seven-year Return On Tangible Capital (Owner’s Earnings reduced by SBC) of approximately 29%, management is operationally laser-focused and is especially disciplined in capital allocation. It is encouraging that the CEO is also the company’s founder. While a founder-led company is not always beneficial for a company’s long-term development, it is evident that Jensen is the right person for the job. His goal is more than simply accumulating personal wealth. Jensen’s big audacious goal is to build an enduring company that transforms the world through its computing platform. In the 2021 GTC, he emphatically stated: “Scientists, researchers, developers, and creators are using NVIDIA to do amazing things… we’re thrilled by the growth of the ecosystem we’re building together, and we’ll continue to put our heart and soul into advancing it. Building tools for the Davincis of our time is our purpose. And in doing so, we also help create the future.” He is truly passionate about fulfilling his vision to transform every industry with Nvidia AI.

Nvidia’s Moats

Nvidia competes with accelerated computing and semiconductor businesses that develop processors such as GPUs, CPUs, FPGAs, ASICs, and other systems-on-a-chip (SoCs). Its competitors include Ambarella, AMD, Broadcom, Intel, Qualcomm, Renesas, Samsung, Texas Instruments, and Xilinx. Nvidia also competes with its cloud customers since there is an industry trend toward tapered vertical integration to build specialized in-house chips for a subset of operations. As the company progressively forward integrates into software, it will also compete with companies that sell AI software.

Nvidia’s long-term financial metrics are sound indicators of the presence of its durable competitive advantages. The business has a seven-year free cash flow (reduced by SBC) growth rate of 38.55%. It is also growing GAAP sales and net income at a torrid clip of 28.38% and 47.88%, respectively. Tangible shareholder equity expanded by a CAGR of 37.48%. The enterprise accomplished this fast growth while maintaining a conservative capital structure with a positive net cash position.

Tollbooth Moat (Very Strong and growing)

Nvidia offers its customers a best-in-class accelerated computational platform. The company is in a league of its own as no one else can offer this unique value proposition. Artificial Intelligence is becoming increasingly necessary for business operations to remain competitive in their respective industries. Businesses will need to either develop their homegrown solutions or outsource to a partner. While a high-performance computing (HPC) corporation may have the technical competencies to create the technology from the ground up, many enterprises do not have this luxury. While HPCs often have an army of engineers building in-house solutions, they still rely on suppliers. For example, all of the major cloud companies partner with Nvidia. The company commanded an 80% market share globally for AI processors used in data centers in 2020. Through several powerful competitive advantages, Nvidia has a virtual monopoly in the industry. There are two main reasons for its tollbooth moat. The first is its superior offering through the platform approach compared to what its competitors can deliver. The second is the massive economies of scale that stifle competition. Let us delve into each point.

First, Nvidia is virtually the only company that can deliver a complete full-stack system ranging from market-leading processors and networking components to tightly integrated software. There is simply no other business in the industry that offers the overall performance and value for the money. Enterprises spend substantial capital to stay ahead of the curve or maintain their competitive positions through artificial intelligence and data processing. According to IDC, worldwide spending on AI will reach $110 billion by 2024. Use cases such as conversational AI, shopping recommendations, self-driving vehicles, efficient logistics, and smart manufacturing are crucial investment areas. Nvidia’s processing prowess is second to none. Its accelerators are consistently top performers in MLPerf benchmarks. The company is also building a robust software service suite that enables enterprises to leverage AI to solve fundamental business problems. Traditionally non-tech businesses that want to use AI typically do not have the necessary core competencies to develop complete in-house systems. They need to work with partners that provide hardware, integrated software, and deep technical expertise to make it work. Since Nvidia offers these components as part of a complete platform, a buyer can reduce its costs and number of suppliers through a partnership. As part of its strategy, management takes a holistic and generalized approach to deliver market-leading customer solutions. Nvidia’s VP of Accelerated Computing underscored this point.

“NVIDIA has shifted into a company that’s thinking about the data center as a compute and that includes not just our GPUs but the systems, the networking, increasing the CPUs and how they all fit together in a broader software stack in the workflow.”

Nvidia also designed its architecture for modularity, which enables customers to pick and choose the individual components that fit their needs.

Second, Nvidia’s economies of scale give it tremendous operating leverage. This reality makes it difficult for a current competitor or prospective entrant to contend. The level of R&D spending and capital expenditures in developing a full-stack compute processing platform are significant barriers to entry. For instance, Nvidia’s R&D and capital expenditures over the last three years total a combined $14.614 billion. By comparison, AMD spent around $7.192 billion, or ~51% less. There are also hidden assets that a competitor would have to replicate, such as the off-balance-sheet expertise and proprietary knowledge forged over three decades. As a result, a company would have to spend more money than indicated on the balance sheet to catch up. Although Intel has considerable resources that it could deploy to compete directly, it ties up a large percentage of its available capital in manufacturing and tangential markets. While it is currently developing a homegrown GPU, it is not certain that Intel can execute and win a significant market share in this new domain.
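The spending gap quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python that uses only the two three-year totals from the text; the function name `percent_less` is illustrative and not taken from any source.

```python
def percent_less(reference: float, other: float) -> float:
    """Return how much smaller `other` is than `reference`, as a percentage."""
    return (reference - other) / reference * 100


# Three-year R&D plus capital expenditure totals quoted in the text, in $ billions.
NVIDIA_SPEND = 14.614
AMD_SPEND = 7.192

gap = percent_less(NVIDIA_SPEND, AMD_SPEND)
print(f"AMD spent about {gap:.0f}% less than Nvidia")
```

The comparison is only as good as the two inputs, which are taken as given here.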

Another off-balance sheet asset that is not easily replicable is its robust developer ecosystem and institutional influence. In 2006, the company invented CUDA, a proprietary parallel computing API. It has been downloaded over 30 million times with an ecosystem of roughly 3 million developers. Many top universities such as Harvard, Dartmouth, Stanford, and others incorporate CUDA in their class curriculum. This acceptance has helped form a virtuous cycle since the pervasive academic support incentivizes tech companies to use Nvidia’s proprietary technology. As more employers utilize the technology, more educational institutions incorporate it into their curricula. Businesses such as Autodesk, Microsoft, and Apple have leveraged a similar dynamic to dominate their respective industries.

Lastly, the company’s products benefit from tremendous cost efficiencies at scale through a unified architecture. In the 2020 Annual Report, Nvidia states: “a substantial amount of our inventories is maintained as semi-finished products that can be leveraged across a wide range of our processors to balance our customer demands.” In other words, it has effectively reduced its need to tie up a significant amount of cash into inventory and working capital since many of its products contain the same essential raw materials. Likewise, on the software side, it can “support several multi-billion dollar end markets with the same underlying technology by using a variety of software stacks developed either internally or by third-party developers and partners.” This operating leverage is ultimately made possible through its disciplined design approach to use the same underlying architecture.

Brand Moat (Very Strong and growing)

Nvidia positioned its brand as the premium choice for accelerated computing. Procuring the best product on the market is essential for customers with complex business problems that require substantial processing capability. The business has strong pricing power, evidenced by its seven-year ~60% gross margin average. While there are other alternative products to choose from, Nvidia’s processors offer the most bang-for-your-buck. For instance, its premium DGX station is $149,000. The company claims it would take 11,000 CPU servers in a 250 rack data center to achieve the same bandwidth. This setup would cost more than 6x and consume around 15x the space.

Buyers are overwhelmingly selecting its products over its peers in multiple end markets. For example, Nvidia boasts an 83% share in the discrete GPU market. The next closest is AMD at 17%. Likewise, Steam’s hardware survey in 2021 shows that 77% of gamers use its chips. It has over 200 million gamers using GeForce with over 80% market share. This domination makes sense considering the highly competitive nature of gaming, where using second-rate graphics processors is detrimental to success. In data centers, Nvidia has an 80% share of AI-focused accelerators. As of 2019, Nvidia supplies 97% of all AI-accelerated hardware used by the top 4 cloud providers. This strength extends into professional visualization as it holds a 90%+ market share in graphics for workstations.

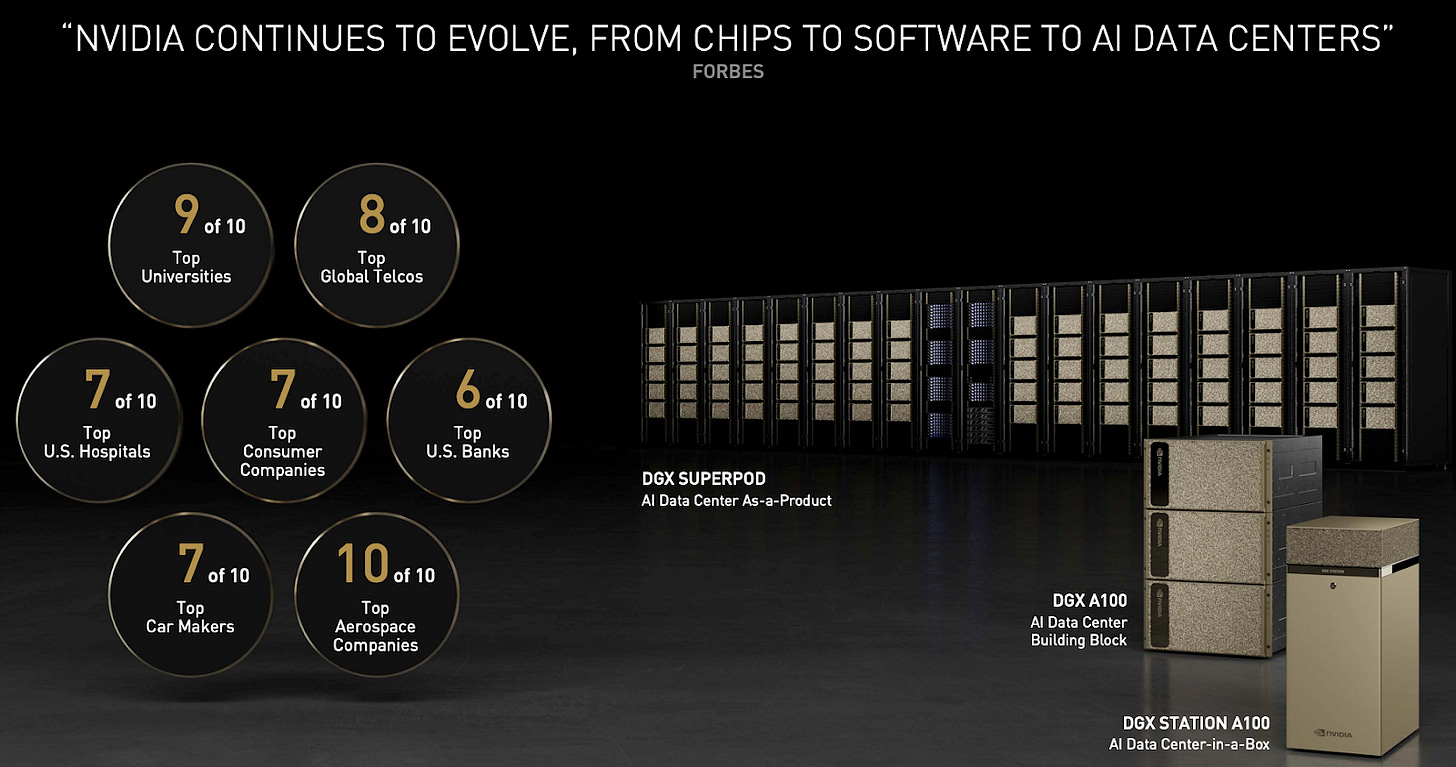

High penetration rates within the top institutions are good indicators that a brand’s product is the industry standard within their respective markets. For instance, within the top-ten lists for each industry, its premium DGX system employed to train complex neural networks is used by nine universities, six US banks, seven carmakers, ten aerospace companies, and seven consumer companies. This potent combination of market leadership and mind-share is a profound advantage for Nvidia. Al Ries and Jack Trout underscored the human propensity to continually select trusted brands: “Almost all the advantages accrue to the leader. In the absence of any strong reasons to the contrary, consumers will probably select the same brand for their next purchase as they selected for their last purchase.” People generally tend to stick to what they know and trust when faced with multiple options. The principle has allowed Coca-Cola and Disney to dominate for decades. Nvidia is cultivating a similar brand affinity with its customers.

Switching Moat (Narrow and growing)

The company’s full-stack approach is raising switching costs for its buyers. Nvidia created a valuable ecosystem that benefits its customers the more they integrate. For example, many customers that purchase hardware also use Nvidia’s software offerings. This layer includes sophisticated application frameworks, apps, tools, and infrastructure software. Nvidia’s proprietary code is not compatible with its competitors’ hardware. Therefore, customers that leverage the software increase the costs and operational complexity required to move to another system. Companies such as Microsoft and Salesforce have greatly benefited from a similar dynamic. Jensen underscored this platform stickiness on an investor conference call. He emphasized that it is not easy for developers to port their AI workloads to other systems since there is a dependency that their system creates, rendering it beneficial to stay on the platform.

There are several costs involved when migrating away from its platform. The list includes finding an entirely new solution, hiring new engineers with different skill sets, teaching engineers a new system, rewriting the existing code, or developing an application from scratch. These are all immense exit barriers. Engineers who attempt to migrate away from the ecosystem must often decouple from hardware and software. This endeavor is no simple task. At Deutsche Bank’s Autotech Conference, the VP and GM of the Automotive unit accentuated the Nvidia Drive platform’s power to lock in customers.

“Everything is connected to this Hyperion platform architecture. And so when we have customers -- if they are aligned to that Hyperion architecture, we can help them across all of the stacks. And we design our platform so it's easy to go from one generation to the next. So you could have an architecture based on a Xavier computer. You can remove that Xavier computer and slot in a form factor compatible Orin computer into your vehicle. And we design our software such that it's API compatible. So it's really easy to transition from generation to generation and leverage your investment not just across your cars at one generation but across generations.”

A customer who leaves loses the comprehensive multi-generational product support Nvidia’s solution provides. Designing its platform for backward compatibility and upgradability enables partners to leverage their capital investment across multiple product generations. This idea is similar to buying an iPhone, then subsequently uploading personal data to iCloud and purchasing digital products and services. These investments incentivize the customer to continue to stick with Apple in the future.

Management

Quality of Leadership

Jensen Huang founded NVIDIA in 1993. His execution in pivoting from a GPU gaming business to a computing platform is phenomenal. This transition was not a simple undertaking. The executive team carefully decided which end markets to target and then devoted substantial resources toward research and development, including hiring the right people. It found experts and specialists in each field to understand the needs of each market. Then engineers were required to build out the products and services to address the vast number of use cases under a singular platform architecture.

The CEO demonstrates a remarkable ability to focus and prioritize long-term durability. One decision that particularly stood out was his determination in 2015 to exit the mobile computing space. While there was some opportunity to increase sales, smartphone chips had become an undifferentiated commodity. He was not interested in a business with little room to add value. This situation is a positive signal of astute management skills since it indicates that he will not pursue revenue at any cost. He consistently emphasizes prioritizing the long-term over short-term gain in his shareholder letters and conference calls. With a Glassdoor approval rating of 98%, it is evident that his employees recognize his outstanding management effectiveness and character.

Furthermore, how a CEO manages adversity is a good indicator of leadership capabilities. In late 2018, the steep drop in digital currency prices caused crypto miners to purchase significantly fewer discrete GPUs. Nvidia’s management team lacked adequate visibility into how much of its revenue was from crypto. It turned out that the business was more exposed than management indicated publicly. The sudden drop in demand caused inventory excess. Thus, the company stopped shipments to allow the channel inventory to normalize again. As you can imagine, this caused a revenue reduction for a few quarters. Some investors felt misled and subsequently filed a class-action lawsuit. Although it is hard to know for sure, I think there is a reasonable probability that the sudden drop in GPU demand caught the executive leadership team off guard. The company did not have data regarding how its customers planned to utilize the GPUs. Once the situation became evident, management immediately communicated the situation to investors and then explained the future expectation for inventory channel normalization. The company then quickly shifted its strategy to mitigate the problem going forward. It did this by making its gaming processors less effective for mining by lowering the hash rate and creating CMP processors optimized explicitly for the activity. This strategy effectively added greater visibility and segmentation to its GPU sales while simultaneously alleviating the demand for its gaming GPUs. This sequence of events demonstrated management’s accountability and willingness to course-correct to serve its customers and investors better.

Fiscal Discipline

Management’s capital allocation record is stellar. For example, its seven-year average return on tangible capital is 29.06%. It is earning tremendous returns while using minimal leverage. It can pay off all of its debt with ~two years’ worth of free cash flows or all of the debt immediately with the liquidity on its balance sheet. It also has an EBIT interest coverage ratio of 42.55x. Therefore, the business has little default risk.

Insiders do not own a substantial portion of the company as they own only 3.72% of the diluted outstanding shares. Their overall transactional activity is generally neutral (no red flags) since they have not sold an unusual amount of equity or voluntarily bought any since 2020. It appears that most of the insider transaction activity is to convert equity provided as income into cash rather than compelled selling.

Nvidia has a reasonable executive compensation structure that balances long-term stock performance with operational efficiency. In 2021, the company paid the CEO $19.3 million in cash and PSUs. It gave the other NEOs between $5 million and $8.5 million in salary, PSUs, and RSUs. The performance stock units are earned based on operating income and three-year total return relative to the S&P 500.

Nvidia predominantly uses cash flow for investing back into the business. It utilized 32% of cash flows in the last five years for strategic acquisitions. Meanwhile, it returned 20% of cash flows to shareholders through buybacks and dividends.

Three Reasons to Invest in Nvidia

Growing Large Barriers to Entry

Data is the new oil. Simply put, the demand for data and the accelerators that efficiently process oceans of bytes is skyrocketing. Nvidia is well-positioned to benefit. The private and public sectors realize the tremendous opportunities and advantages of harnessing the power of artificial intelligence. For instance, vertical industries are looking to cut expenses, build revolutionary products, and streamline processes through AI business automation, real-time virtual collaboration, smart manufacturing, and logistics. Hyperscalers need to consistently offer customers the best processors and enterprise cloud tools to stay competitive. Scientists are producing breakthrough discoveries and medical treatments at speeds faster than ever imagined possible. There is no company primed to capture this massive opportunity quite like Nvidia.

Unfortunately for the enterprise, the AI industry will continue to attract new entrants looking to take some of their profits. This phenomenon is the natural gravity of any industry with high returns on capital. However, the economies of scale that Nvidia is forming will continue to insulate it from these competitive forces. Its full-stack strategy has significantly increased the level of capital required to compete.

Let us go through the high-level components that a competitor would have to reproduce to produce a competitive solution in the marketplace. On the processor level, Nvidia built a proprietary triple threat with its GPU, CPU, and DPU. These chips complement each other. Nvidia designed the GPU to optimize throughput by leveraging thousands of threads to process many tasks in parallel. The company created the Grace CPU mainly to handle latency-critical applications. Critics are skeptical of Nvidia entering the space since Intel and AMD dominate it. This view is a misunderstanding of the strategic rationale to develop the CPU. According to Nvidia’s GM and VP of Accelerated Computing, the company’s intention is not to compete directly in the category but “rather, the system design will allow them to solve AI problems that are orders of magnitude larger than today’s.” In other words, it created Grace as a complementary fit within its system architecture. Instead of relying on a 3rd party chip, it can now offer its customers a product finely-tuned for its platform. Lastly, its advanced DPU chip is uniquely proprietary. It serves an essential job to offload all non-application processing to free up the CPU server to run the primary workloads best suited for the CPU.

While the company has poured billions of dollars of R&D into designing these processors, Jensen Huang regularly emphasizes that the model of computing power is no longer about any individual chip. Instead, it is about the distributed server at the data center scale. Without the support of a robust networking backbone, there will be significant bottlenecks even with the fastest chips in the world. Since the 2019 acquisition of Mellanox, networking is where Nvidia has a decisive edge over its competitors. As data grows exponentially, data centers will need to incorporate the lightning-fast I/O performance of Mellanox InfiniBand and NVLink interconnect technology to mitigate bandwidth constraints.

Lastly, Nvidia developed an incredible software layer on top of the hardware. The software is its differentiating characteristic. While people traditionally think of Nvidia as a semiconductor company, it has more software engineers than hardware engineers. The business is also not new to the software market. Millions of developers use the CUDA platform the company developed in 2006. More recently, it has developed a comprehensive suite of SDKs, apps, tools, and services that facilitate the development of highly sophisticated AI applications. The company is ultimately working toward achieving its audacious goal to empower enterprise domain experts to leverage and build AI applications without writing one line of code.

A business that desires to outcompete Nvidia must either reproduce its assets to overcome economy of scale advantages or design a cheaper alternative technology that is an adequate substitute for its platform. Neither is a simple pursuit. Looking at its balance sheet, one may falsely conclude that completely replicating its assets would take less capital than it would in reality. Let us stipulate that Nvidia needs 1% of FY 2021 TTM revenues in cash for ongoing operations. Then only $269 million (1% * $26.9 billion) of the $21.2 billion of cash and cash equivalents is required, leaving $20.931 billion of excess cash. The total amount of assets needed to reproduce is therefore $23.26 billion ($44.187 billion in total assets - $20.931 billion). However, this number understates the actual cost for two reasons. The first reason is that GAAP accounting does not capitalize R&D costs as investments. Instead, these costs flow through the income statement as expenses. Since the company’s R&D investment delivers benefits over multiple years, this deserves an adjustment to capture the investment adequately. Assuming a useful life of 3 years, the capitalized investment for Nvidia and Mellanox together comes out to $5.34 billion ($4.82 billion + $522 million).

This calculation still understates the actual cost of reproduction since both Mellanox and Nvidia are likely to use their capital more efficiently than a potential entrant with less experience. There are hidden learning curve costs that a company outside looking in would have to bear. Thus, let’s assume that it would take an enterprise in an adjacent industry 25% more capital and at most 75% for a company with less high-performance computing experience. This addition gives us a range of $6.68 - $9.35 billion for total R&D investment.

Finally, we have to add the marketing outlays required to penetrate the market successfully. Although not simple to estimate, I will set a range of three to five years’ worth of Nvidia’s future marketing spend. I will also assume that Nvidia allocates half of its SG&A toward marketing. For the past five years, the company’s SG&A expenditure has been roughly 10% of revenue, so marketing is 5%. The company’s five-year revenue CAGR is 27%, but I will lower this growth rate to 15% for the sake of conservatism. Thus, the marketing expenditures required would be $5.38 - $10.45 billion.

In total, this brings us to $35.32 - $43.06 billion. Considering Nvidia’s customer captivity and institutional penetration, this estimate may still understate the entire investment required.
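The reproduction-cost estimate above chains several small calculations, so it may help to see them in one place. The following is a rough sketch in Python that uses only figures stated in the text. One point is my assumption rather than something the text specifies: the marketing projection compounds the $26.9 billion revenue base at 15% starting one year out. Because of that assumption and because the text rounds its intermediate figures, the result should land close to the quoted range but not exactly on it.

```python
# All figures are in $ billions and come from the text above.
REVENUE = 26.9              # trailing revenue used in the text
CASH = 21.2                 # cash and cash equivalents
TOTAL_ASSETS = 44.187
CAPITALIZED_RND = 5.34      # Nvidia plus Mellanox, three-year useful life
OPERATING_CASH_PCT = 0.01   # cash needed for operations, as a share of revenue
MARKETING_PCT = 0.05        # half of the roughly 10% SG&A ratio
GROWTH = 0.15               # conservative revenue growth rate


def marketing_spend(years: int) -> float:
    """Sum projected marketing outlays over the next `years` years.

    Assumption (not stated in the text): revenue compounds from the
    current base, with the first projected year one period out.
    """
    return sum(
        REVENUE * (1 + GROWTH) ** t * MARKETING_PCT
        for t in range(1, years + 1)
    )


# Step 1: strip out cash that is not needed to run the business.
excess_cash = CASH - REVENUE * OPERATING_CASH_PCT
asset_base = TOTAL_ASSETS - excess_cash

# Step 2: add capitalized R&D with a 25% to 75% learning-curve premium.
# Step 3: add three to five years of projected marketing spend.
low = asset_base + CAPITALIZED_RND * 1.25 + marketing_spend(3)
high = asset_base + CAPITALIZED_RND * 1.75 + marketing_spend(5)

print(f"Estimated reproduction cost: ${low:.2f}B to ${high:.2f}B")
```

Changing `GROWTH` or `MARKETING_PCT` shows how sensitive the upper bound is to the marketing assumptions, which are the least certain inputs in the estimate.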

Elite Management

Six years after its genesis, the company invented the GPU and introduced the world to the power of programmable PC graphics. Subsequently, Nvidia went on to dominate the graphics processing industry for gaming. Amid this success, Jensen and his team realized something profound. They could apply the same processing horsepower that renders video games to accelerate general-purpose computing across many industries. In other words, he unearthed a massive gold mine that was right underneath him. However, to pull off such a plan required intense operational focus and exceptional leadership. This CEO was the man for the job. As explained earlier, the business dominates gaming and the professional visualization and high-performance computing sectors. It is also developing a foothold in the rapidly rising autonomous vehicle industry with substantial design wins from well-established OEMs, first-tier suppliers, and prominent AV startups, including those specializing in trucking and RoboTaxis.

This success would not be possible without Jensen’s intense passion for building his company into one that transforms the world. It is a passion that is not driven primarily by the pursuit of money. You cannot find this level of determination listed as an intangible asset on its balance sheet. While it is hard to find leaders who can come close to matching the impacts of phenomenally successful CEOs such as Steve Jobs, Bill Gates, and Jeff Bezos, I believe that Jensen Huang is well on his way. For instance, the numbers show that he and his management team are superb capital allocators with a seven-year average Return On Capital Employed and Return on Tangible Equity of 29.06% and 38.34%, respectively. As mentioned earlier, the CEO achieved this capital efficiency without using extensive debt.

Ultimately, Jensen’s historical performance, relentless passion, brilliant leadership, and priority of long-term execution over short-term quarterly results is a rare combination to find. Jensen’s leadership acumen and capital allocation capabilities augment the business’ margin of safety and reduce the chance for permanent impairment of capital.

Multiple Powerful Drivers of Growth

Another compelling reason to own this business is that it has multiple engines of growth. Many companies with deep moats only have one business executing extraordinarily well. There is nothing wrong with this characteristic. All things equal, a company that focuses is generally more effective than one that does not. The downside, however, is that a single-engine business is entirely dependent on that segment to perform. If conditions deteriorate, it has no other revenue stream to fall back on. Nvidia is not in this position. While its gaming and data center businesses comprise 85% of the total revenue stream, it is an incredibly diverse business under the hood.

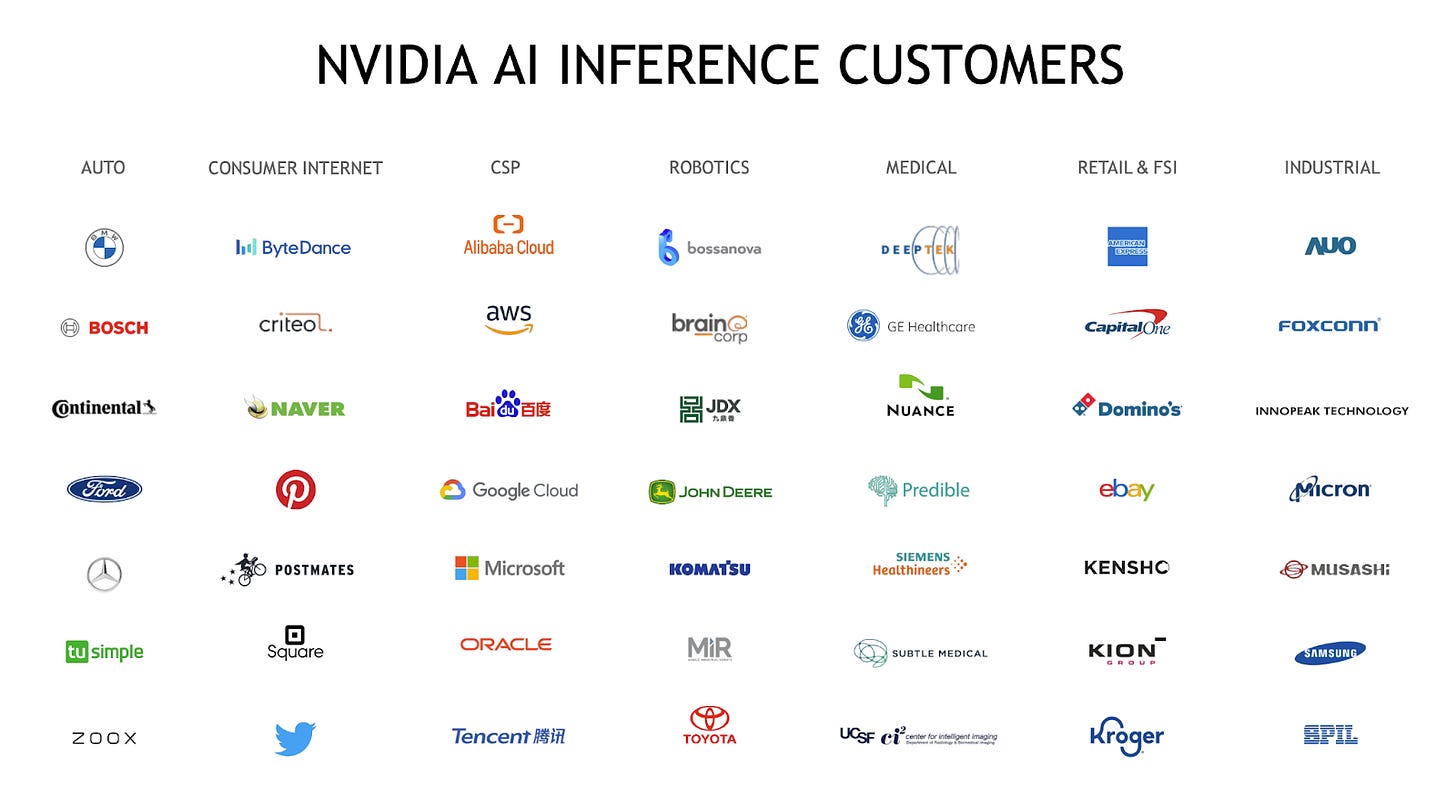

Its data center business has an incredibly diverse base of end markets that it serves. It includes hyperscalers, cloud providers, vertical industries, the public sector, and edge data centers. It partners with every major cloud service provider globally. It sells to enterprise customers in various sectors such as financial services, telecom, transportation, oil and gas, higher education, health care, manufacturing, and logistics. To understand its dominance, one can simply look at the top supercomputers in the world. For example, ~68.4% of the top 500 most powerful computer systems globally, including 70% of all new systems, use Nvidia technology. This achievement is remarkable.

Until recently, Nvidia primarily monetized the physical hardware and provided all the software for free. However, the business model is evolving with its new set of value-added services. Nvidia Base Command, Fleet Command, and AI Enterprise services facilitate AI data analytics, application development, and large-scale deployment for AI scientists and developers. It is compatible with the same VMware vSphere virtualization that hundreds of thousands of IT departments already use today, effectively bridging the gap between IT infrastructure and engineering. Management consistently underscores its VMware partnership as an essential mechanism to encourage widespread adoption and increase customer stickiness. While it is still in its early days, this is an exciting monetization opportunity and the next phase in the transformation into a full-stack computing platform.

The gaming segment is a cash cow. With a stellar seven-year CAGR of 29.34% through the end of 2021, its performance speaks for itself. The company offers high-end discrete GeForce Graphics Cards, gaming laptops, SHIELD TV devices, and the GeForce NOW game streaming service. It is massively benefitting from a significant tailwind in e-sports. Serious gamers worldwide compete to win massive sums of money while spectators on platforms such as Twitch join in on the fun. According to the CFO, Colette Kress, the audience for global eSports is approaching 500 million people with over 700 million live-streamers. This dynamic encourages gamers and spectators alike to purchase the most performant gaming rigs. This industry dynamic is turning out well for Nvidia. As noted earlier, its market share is phenomenal in a gaming GPU market that some analysts expect to grow at a compounded rate of 14.1% through 2026. Its relatively nascent GeForce NOW “Netflix for gaming” service is growing fast as it recently doubled YOY to reach 14 million gamers. Analysts should pay more attention to this opportunity since GeForce NOW is a SaaS model that offers incredible value to a massive addressable market. The unique value proposition for gamers to avoid purchasing expensive hardware by paying a monthly fee is enticing. The unit economics are appealing. Unlike a competitor, such as Google Stadia, it can source the GPUs in-house. Nvidia’s gross margin is currently 65%. If hardware is 50-70% of the total gross operating cost, then Nvidia can charge 32.5-45.5% less than its peers (competitor GPU % of gross expense x Nvidia’s gross margin) and maintain the same unit profit.
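The pricing headroom claimed for GeForce NOW follows from a one-line formula: the competitor’s GPU share of cost multiplied by Nvidia’s gross margin. The following is a minimal sketch in Python of that formula as the text states it. The function name `price_headroom` is illustrative, and the calculation simplifies by treating Nvidia’s entire gross margin as a saving on the hardware portion of a competitor’s cost.

```python
NVIDIA_GROSS_MARGIN = 0.65  # gross margin quoted in the text


def price_headroom(hardware_cost_share: float,
                   gross_margin: float = NVIDIA_GROSS_MARGIN) -> float:
    """Fraction by which Nvidia could undercut a peer at equal unit profit.

    Implements the text's formula: the peer's GPU share of gross cost
    multiplied by Nvidia's gross margin.
    """
    return hardware_cost_share * gross_margin


# Hardware is assumed to be 50% to 70% of a competitor's gross operating cost.
for share in (0.50, 0.70):
    print(f"hardware at {share:.0%} of cost -> "
          f"{price_headroom(share):.1%} lower price")
```

The two endpoints correspond to the 32.5% and 45.5% figures in the paragraph above. A lower hardware share or a thinner margin shrinks the headroom proportionally.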

The Professional Visualization segment is less than 10% of total revenues. It serves many important end markets such as media and entertainment, architecture, scientific visualization, engineering, and construction. It boasts a 90%-plus market share in workstations and is increasing its TAM through several tailwinds such as AR/VR, mobile workstations, and its Omniverse platform for simulating physically realistic worlds. While Omniverse may sound like a massively over-hyped fad with a high chance of failure, the product solves legitimate business needs. For instance, companies can use it to train robots, generate interactive AI avatars and personal assistants, design operationally efficient factories, and enable employees to collaborate in a virtual space. Packaged as an enterprise subscription service, Omniverse is receiving lots of attention, with over 70 thousand downloads from individual creators and 700-plus businesses evaluating it for operational use. There is an opportunity here for locking in customers with high switching costs if companies eventually integrate heavily with Nvidia’s proprietary Omniverse SDKs and libraries. While this has enormous potential if the company can execute, I am not considering any value for Omniverse in my valuation model.

The Automotive segment is currently only 2.4% of the total revenue. Historically, the company has sold millions of vehicle infotainment, navigation, and virtual cockpit systems to a broad range of automakers. The business has yielded mediocre results thus far, with a five-year CAGR of ~3%. However, the company is investing heavily in this area for the long term. For example, the Drive platform is an autonomous vehicle stack for delivering an end-to-end self-driving experience. The solution offers a comprehensive collection of testing, prototyping, and deployment tools. Thorough testing is critical for corporations racing to develop level 4 or 5 autonomous vehicles since the car must intelligently account for nearly every scenario on the fly. While iterative testing on real roads and highways is necessary, it is inadequate. AV companies need to simulate and test an extensive range of data points that are difficult to replicate consistently, such as the thousands of types of debris and obstacles found on the road. Nvidia understood this hardship and created a proprietary simulator named Constellation that solves it by rendering a realistic virtual world.

Drive’s architecture is open and modular enough that a partner can work with Nvidia at any level. It is not an all-or-nothing relationship. The strategy is bearing fruit as the company is currently working with roughly 250 partners, including OEMs, Tier 1 suppliers, trucking companies, robotaxi operators, sensor manufacturers, and software-focused businesses. It secured landmark deals with Mercedes and Jaguar Land Rover. These brands will ship Nvidia Drive technology with their new fleets and generate hardware and recurring software sales in 2024 and 2025, respectively. There is a good chance that a growth inflection point is coming soon, considering an $8 billion design win pipeline over the next six years.

Thesis Inversion

Fast Pace of Technological Innovation Weakens Competitive Position

With a growing estimated AI computing TAM of $100 billion in edge computing, HPC, enterprise, and inference, competition will only continue to increase. The GPU is not the only show in town. There are other types of processors, such as CPUs, ASICs, TPUs, and FPGAs. Money is pouring into the sector with the rapid rise and proliferation of machine learning applications. This investment has caused an explosion of new and exciting silicon chips. Traditional semiconductor businesses like Intel, AMD, and Xilinx are investing heavily in developing AI processors. The major cloud companies are vertically integrating backward and producing specialized silicon, such as Google with the TPU and Alibaba with the Yitian 710. Cloud businesses have a compelling incentive to build proprietary infrastructure to reduce costs at scale and forge their competitive advantages around computing power.

Also, while Nvidia dominates training, AI inference is an entirely different use case where it does not boast nearly the same hardware advantage. If Nvidia fails to capture the inference market, it could become vulnerable to losing AI leadership. A McKinsey research report projects that the inference market will be more than twice the size of the training market in 2025. The research firm also asserts that, while GPUs are optimal for handling training applications with higher throughput requirements, the architecture is not conducive to inference. Companies often prefer CPUs, ASICs, and FPGAs for their lower latency in real-time decision-making. These chips are often better fits with superior performance, cost, and power-efficiency specs. While not as customizable as CPUs, ASICs are specifically designed to process a limited set of computations extraordinarily well from a performance and power consumption standpoint. Thus, ASICs are generally sufficient for the job. The Lead of Google AI, Jeff Dean, wrote a paper named “The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design” in 2019. In the paper, he asserts that, while ASICs take around two years to develop due to design complexity, machine learning could reduce the time frame from many months down to weeks.

“This would significantly alter the tradeoffs involved in deciding when it made sense to design custom chips because the current high level of non-recurring engineering expenses often mean that custom chips or circuits are designed only for the highest volume and highest value applications.”

Jeff Dean

A new method to speed up the ASIC production cycle and optimize the designs would improve their economic attractiveness. McKinsey ultimately believes that CPUs and ASICs will eventually command a combined 90% inference market share. As enterprises gain confidence in their trained AI models for production use, they will increasingly shift resources toward inference. If McKinsey is correct, a smaller share of future AI capital expenditure budgets will go toward purchasing Nvidia’s products.

Although Nvidia recently unveiled its Grace CPU specifically designed for the data center, the venture is not guaranteed to succeed. It is an uphill battle to convince customers to buy its first-generation CPU over those from Intel and AMD, which have dominated this market for decades. A failure to extend its leadership into inference while defending its current training position is a considerable risk. In that case, the switching costs that it seeks to cultivate will never entirely come to fruition.

Lastly, Nvidia’s unified architecture is a double-edged sword. Operating leverage, just like financial leverage, can work against a business. Michael Porter spoke about this concept in depth in his book, Competitive Strategy: Techniques for Analyzing Industries and Competitors.

“Technological change may penalize the large-scale firm if its facilities designed to reap scale economies are also more specialized and less flexible in adapting to new technologies. Commitment to achieving scale economies by using existing technology may cloud the perception of new technological possibilities or of other new ways of competing that are less dependent on scale.”

In other words, if a competing solution is compelling enough to threaten its market share, Nvidia’s current architectural concentration could quickly transform its assets into liabilities. The industry can force its hand and cause the company to shift its focus toward an entirely different technological paradigm. In that case, its scale would work against it and make it challenging to stay nimble enough to pivot successfully.

Commoditization Risk

Any undifferentiated product is at risk of turning into a commodity. Technology is especially vulnerable to the commoditization process since innovation moves quickly. Once a company invents a cutting-edge product that gives it an edge in the market, history tells us that the natural progression is for competitors to attempt to clone it and catch up. For instance, in the late 1990s, IBM figured out a method to use copper wiring in integrated circuits. IBM spent plenty of money and undertook years of research to accomplish this. Unfortunately for IBM, competitors copied it just two years later, after its supplier partnered with them.

Additionally, the technological leader must allocate a significant amount of its capital toward R&D expenses to innovate. The business that copies can do so through less expensive means such as poaching employees from the firm, working with suppliers, or reverse-engineering to replicate an existing product. Nvidia may experience a similar dynamic. The competitive landscape is fierce as substantial capital is flowing into semiconductor companies. According to PitchBook, venture capital firms have invested over $11 billion into United States chip startups since 2012. The race to develop the best chips in the world constantly keeps Nvidia on its toes. Rapid industry innovation increases the chance that peers create similar products and services that would preclude Nvidia from demanding a hefty premium in an increasingly fragmented environment.

Inversion Rebuttal

Full-Stack Platform Strategy Mitigates Technological Risk

Nvidia will indeed need to innovate perpetually to maintain leadership. However, this reality has not stopped a franchise like Apple from dominating. For years after releasing the iPhone in 2007, critics asserted that its failure to invent cutting-edge technology significantly diminished its future chances of success. This argument failed to consider the considerable switching costs it cultivated through its ecosystem and the consumer brand positioning that it possessed. Nvidia suffers from a similar misunderstanding. For example, it is generally neither cheap nor easy for its customers’ engineers to port their AI workloads to another ecosystem. This dependency makes it beneficial for its partners to stick around even if the technology falls slightly behind at any given point in time. Further, Nvidia’s large R&D budget and phenomenal track record of innovation can reassure its partners that it will quickly close a technological gap.

Although Nvidia has a larger market share in training than inference, it is gaining substantial traction in the latter. The company is spending considerable resources to beef up its inference capabilities. This investment is starting to pay off as its inference revenue more than tripled year-over-year in Q4 2021. Over 25 thousand companies use Nvidia AI inference, including Capital One, Snap, Samsung, Block, and Siemens Energy.

Nvidia’s Triton inference server software is a highly optimized cloud and edge inference solution. The software continues to see increased adoption. For example, several major cloud providers support it, including Amazon, Google, Microsoft, and Tencent. Nvidia’s A100 data center accelerator offers tremendous horsepower for training and inference. Management underscored an industry trend in which companies migrate from CPUs to GPUs for AI inference. The CEO believes that this shift is happening for two main reasons. The first is that its GPUs can keep up with the increasing scale and complexity of deep neural networks while maintaining the low-latency responsiveness necessary for inference. Until recently, the industry could rely on Moore’s Law, where CPU performance doubles every two years due to the perpetual growth in the number of transistors per integrated circuit. Jeff Dean pointed out that we are now in the post-Moore’s Law era in which performance will double only every 20 years. Unfortunately, this slowdown coincides with accelerating computational demand from AI. Secondly, the full-stack platform simplifies the intricacies of the complete development lifecycle, including prototyping, developing, and deploying production-ready inference applications. Jensen says it achieves this by “supporting models from all major frameworks and optimizing for different query types including batch, real-time and streaming.”

Nvidia is not dismissing the CPU. Instead, it is embracing it as a core element of its ecosystem. It recognized the importance of the chip by designing its own data center server CPU and incorporating it into its three-chip strategy. The company does not intend to build a commodity chip and directly compete with AMD and Intel. Instead, Nvidia engineered it to integrate specifically with its GPU infrastructure and support large-scale high-performance computing workloads.

Through the unified architecture strategy, Nvidia concentrates on designing a generalized AI operating system that employs state-of-the-art accelerators to do the job. Management believes that the data center industry is moving toward architecting distributed workloads across a swarm of nodes rather than focusing primarily on the processing power of a single chip. Thinking about these problems at scale is where Nvidia has an advantage. While specific processors that solve narrowly defined use cases can offer fantastic performance, they often come at a cost. Nvidia’s chief scientist, Bill Dally, fleshed out the pitfalls of this strategy: “If you over-specialize it for the networks today, by the time it actually comes out, you’ve missed the mark. So you have to make it general enough that you can track really rapid progress in the field.” In the same interview, the company’s Senior VP of GPU Engineering added: “Think about someone in a data center. Are they going to want to buy it if it’s only good at doing one thing? Once they put that chip in their data center, it is going to be there for at least 5 or 10 years, whether they’re using it or not.” These specialized processors do not offer customers the best bang for the buck in a fast-paced tech environment where chip design projects can take roughly two years to complete. Once a chip ships, its life span must last at least three years for economic viability. Over-specializing is often not the optimal choice for decision-makers and practitioners despite the upside. Thus, the generalized solutions that offer multi-generational software support, backward compatibility, and robust performance at a reasonable price are going to win out.

Platform Strategy Creates Unmatched Value

Nvidia’s ecosystem of products and services creates a unique value proposition not found elsewhere. This value shows up in an excellent gross margin that has risen steadily. For example, it was 52.02% in 2012 and rose to 64.93% in FY 2021. This metric should continue on its trajectory, especially as SaaS sales begin to gain traction. Selling these services also encourages additional hardware revenue since they only work with Nvidia gear. Regardless, the business should maintain robust margins through its tollbooth moat. Corporations will need to pay a premium in such a competitive environment since they do not have many options other than Nvidia to develop end-to-end AI capabilities.

Through its extensive three-layer model of customer engagement, Nvidia has created this outstanding value to drive customer adoption and increase stickiness. Its foundation is ready-to-use hardware systems (HGX, EGX, and DGX) that integrate advanced Mellanox interconnect technology. The second layer comprises a robust collection of low-level software to accelerate computationally demanding applications. It includes CUDA acceleration libraries, models, and frameworks. The top layer is a suite of end-to-end application frameworks that enable multi-billion dollar AI use cases such as conversational AI, robotics, video conferencing, and recommendation systems at the data center or edge.

Management’s big audacious goal is to extend this platform further and revolutionize how enterprises conduct business. The CEO outlined his vision to enable non-technical specialists across various fields to build solutions using Nvidia AI without writing a line of code. This capability would save its customers money since they could hire fewer engineers.

Bottom Line

In summary, Nvidia possesses the qualities of an enduring capital compounder. The company has built a fortress composed of three synergistic moats that shield its business from the competition. It sells its products and services across a wide range of end markets to the world’s largest firms, all within its unified architecture paradigm. This model gives the business tremendous operating leverage and cost savings that will only increase with scale. The main driver behind these characteristics is the founder-led executive team with a phenomenal capital allocation record, conservative debt profile, and strong execution history.

Nvidia has a long runway for growth. It is on my investment wishlist as a wonderful company. I would not hesitate to buy it at the right price.